‘Math for Programmers’ review

Aaron Cutter, of the Actuaries Institute’s Data Analytics Practice Committee, provides a detailed review of Math for Programmers in the context of learning Python for Data Analytics.

Have you found machine learning and programming books as dry as I have? It’s sometimes difficult to get enthused reading a book that starts off with yet another ‘hello world’ code example. However, when the concepts are familiar and the code shows you a different way to tackle maths problems you may remember from uni days, that could be the light switch moment that brings code to life.

That’s what attracted me to a book entitled Math for Programmers: not to learn maths, but to see simple maths concepts turned into code, and to use that to improve my appreciation and knowledge of code and programming concepts. If you are newish to programming, and to Python in particular, I would recommend “Math for Programmers – 3D graphics, machine learning, and simulation with Python” by Paul Orland.

For me, Math for Programmers wasn’t really a book about learning maths. Instead, it was a way to learn more about Python while using familiar maths concepts from linear algebra and calculus to appreciate what Python code is actually doing. Having said that, the book did provide a refresher on the product rule, the chain rule and partial differentiation.
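If the product and chain rules are rusty, one quick way to convince yourself of them in Python is to compare each rule against a numerical derivative. The sketch below is an illustration rather than code from the book: it approximates derivatives with a central difference (the step size `h` is an arbitrary choice) and checks the product rule, the chain rule and two partial derivatives.

```python
import math


def derivative(f, x, h=1e-6):
    """Approximate f'(x) with a central difference."""
    return (f(x + h) - f(x - h)) / (2 * h)


x0 = 0.7

# Product rule: (u*v)' = u'v + uv', with u = sin and v = exp
numeric_product = derivative(lambda x: math.sin(x) * math.exp(x), x0)
rule_product = math.cos(x0) * math.exp(x0) + math.sin(x0) * math.exp(x0)

# Chain rule: d/dx sin(x**2) = cos(x**2) * 2x
numeric_chain = derivative(lambda x: math.sin(x ** 2), x0)
rule_chain = math.cos(x0 ** 2) * 2 * x0


# Partial derivatives of f(x, y) = x**2 * y: df/dx = 2xy, df/dy = x**2
def f(x, y):
    return x ** 2 * y


df_dx = derivative(lambda x: f(x, 3.0), 2.0)  # hold y fixed at 3
df_dy = derivative(lambda y: f(2.0, y), 3.0)  # hold x fixed at 2

print(numeric_product, rule_product)
print(numeric_chain, rule_chain)
print(round(df_dx, 4), round(df_dy, 4))  # -> 12.0 4.0
```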

While I got a programming tutorial out of the book, others may get an introduction to the fundamentals of machine learning. If you are new to both coding and machine learning but can remember basic linear algebra and calculus, you can whiz through the text, code up the maths and check your understanding of Python in the early sections. In the last third of the book, you’ll learn the building blocks of some popular machine learning algorithms.

A large portion of the book is devoted to vector manipulation. To keep the code simple, the book defines a set of helper functions for common vector operations. If you’re not familiar with Python’s more advanced function features, a brush-up may be necessary. I supplemented my understanding of Python functions by reading A Beginners Guide to Python 3 Programming.
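As a rough illustration of the style, here are a few vector helpers of the kind the book builds up. The names `add`, `scale`, `subtract`, `length` and `dot` are illustrative and may not match the book’s own definitions exactly; the point is the function features they lean on, such as accepting any number of arguments with `*vectors` and regrouping them component by component with `zip(*vectors)`.

```python
from math import sqrt


def add(*vectors):
    """Add any number of vectors, component by component."""
    return tuple(sum(components) for components in zip(*vectors))


def scale(scalar, v):
    """Multiply every component of v by a scalar."""
    return tuple(scalar * coord for coord in v)


def subtract(v1, v2):
    """Return v1 - v2, built from add and scale."""
    return add(v1, scale(-1, v2))


def length(v):
    """Euclidean length of a vector in any number of dimensions."""
    return sqrt(sum(coord ** 2 for coord in v))


def dot(u, v):
    """Dot product of two vectors of equal dimension."""
    return sum(a * b for a, b in zip(u, v))


print(add((1, 2), (3, 4), (5, 6)))     # (9, 12)
print(subtract((3, 4, 5), (1, 1, 1)))  # (2, 3, 4)
print(length((3, 4)))                  # 5.0
```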

After reading Math for Programmers, I had a go at manipulating an image file using vector operations. There’s a simple pleasure in seeing the effects of your code in pictures. For example, I used the following code to subtract each pixel of an image from pure white, revealing the image’s inverse, or negative.

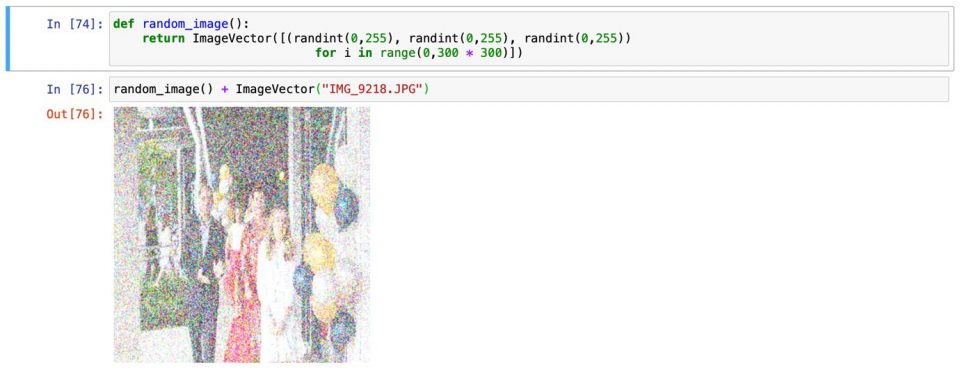
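The code above is shown only as a screenshot, so here is a minimal, hypothetical sketch of the same idea in plain Python. It assumes the image has already been loaded as a flat list of (R, G, B) tuples with 8-bit channels (Pillow’s `Image.getdata()` gives pixels in this form for an RGB image); each pixel is treated as a 3D vector and subtracted from white, (255, 255, 255).

```python
WHITE = (255, 255, 255)


def subtract(v1, v2):
    """Component-wise v1 - v2 for two vectors of equal length."""
    return tuple(a - b for a, b in zip(v1, v2))


def invert(pixels):
    """Return the negative of an image given as a list of (R, G, B) tuples."""
    return [subtract(WHITE, pixel) for pixel in pixels]


# A tiny hypothetical 2x2 'image', flattened row by row
image = [(255, 0, 0), (0, 128, 255), (10, 20, 30), (255, 255, 255)]
print(invert(image))
# [(0, 255, 255), (255, 127, 0), (245, 235, 225), (0, 0, 0)]
```

Inverting twice returns the original image, which is a handy sanity check.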

I then used the following code to add a random amount to each of the RGB components of each pixel in the image. In my mind, this perfectly simulated the TV reception available in country Victoria in the 70s and 80s.

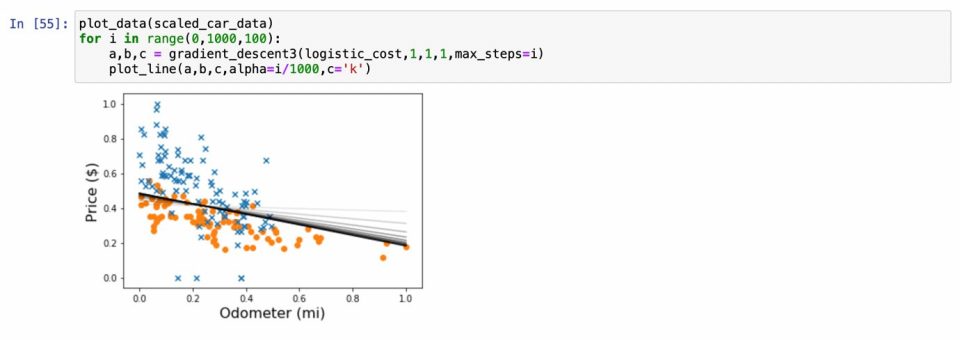
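A comparable sketch of the ‘bad reception’ effect, under the same list-of-RGB-tuples assumption. The noise range is an assumption, since the review doesn’t specify one: each channel is shifted by a random integer between `-max_noise` and `max_noise` (a hypothetical parameter), and the result is clamped so it stays a valid 0-255 value.

```python
import random


def add_noise(pixels, max_noise=40, seed=None):
    """Shift each RGB channel by a random amount in [-max_noise, max_noise],
    clamping the result to the valid 0-255 range."""
    rng = random.Random(seed)  # seeded generator gives reproducible 'static'
    noisy = []
    for pixel in pixels:
        noisy.append(tuple(
            min(255, max(0, channel + rng.randint(-max_noise, max_noise)))
            for channel in pixel
        ))
    return noisy


image = [(255, 0, 0), (0, 128, 255), (10, 20, 30), (255, 255, 255)]
print(add_noise(image, seed=42))
```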

The book contained a lot of sample code to explain concepts. For example, in the machine learning section, the following code creates a gradient descent model using a logistic classifier to find the greatest separation between BMW and Prius cars.

The machine learning techniques taught in the book are very relevant to actuaries. For example, classification problems are common-place in the real world to solve problems like triaging workers’ compensation claims or defining target markets for ads or product features.

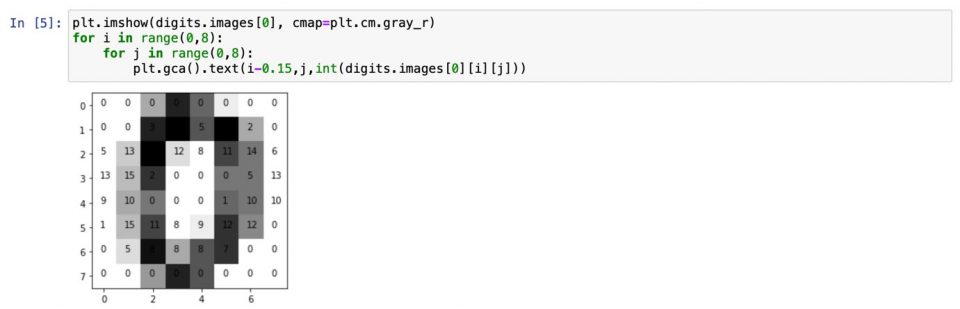

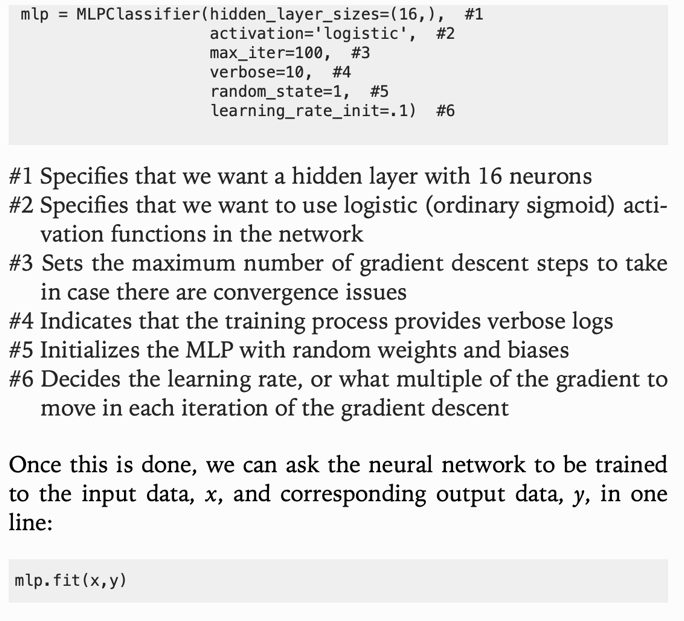
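The book’s classifier code appears only as screenshots, so as a rough, standalone illustration of the technique, here is logistic regression trained by gradient descent in plain Python. The data are hypothetical toy values (two features per car, already scaled to between 0 and 1, labelled 1 for BMW and 0 for Prius), not the book’s data set, and the gradient used is the analytic gradient of the mean log loss; the book’s own code may compute gradients differently.

```python
import math


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def predict(params, x):
    """Probability that car x is a BMW, given parameters (a, b, c)."""
    a, b, c = params
    return sigmoid(a * x[0] + b * x[1] + c)


def log_loss(params, data):
    """Mean cross-entropy loss; eps guards against log(0)."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = predict(params, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def gradient(params, data):
    """Gradient of the mean log loss: the mean of (p - y) times each input."""
    grads = [0.0, 0.0, 0.0]
    for x, y in data:
        error = predict(params, x) - y
        grads[0] += error * x[0]
        grads[1] += error * x[1]
        grads[2] += error  # bias term
    return [g / len(data) for g in grads]


def train(data, learning_rate=0.5, steps=2000):
    """Plain gradient descent starting from all-zero parameters."""
    params = [0.0, 0.0, 0.0]
    for _ in range(steps):
        grads = gradient(params, data)
        params = [p - learning_rate * g for p, g in zip(params, grads)]
    return params


# Hypothetical toy data: two scaled features per car, label 1 = BMW, 0 = Prius
data = [
    ((0.2, 0.9), 1), ((0.3, 0.8), 1), ((0.1, 0.7), 1),
    ((0.7, 0.2), 0), ((0.8, 0.3), 0), ((0.9, 0.1), 0),
]
params = train(data)
print(log_loss(params, data))
print([round(predict(params, x)) for x, _ in data])
```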

The last part of the book steps through a Multi-layer Perceptron (MLP) classifier for hand-written digit recognition. Here is some sample code from this section of the book:

MLP classifiers are a simple form of neural networks which are found everywhere today. For example, facial recognition can be used to keep shop front doors locked to people not wearing masks— this is a real example from my son’s work. Facial recognition algorithms typically use some form of neural network.

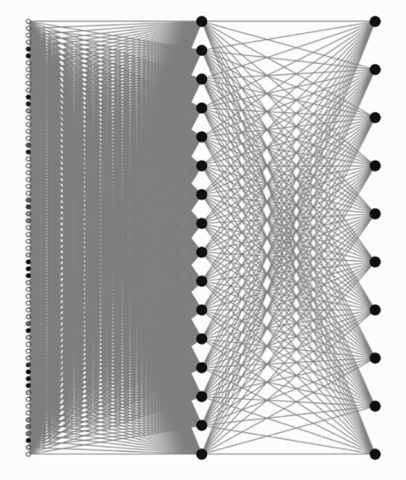
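To show what ‘calculating activations layer by layer’ means, here is a minimal feedforward pass for an MLP in plain Python. The layer sizes are assumptions for illustration (64 inputs, as for an 8x8 pixel digit image, one hidden layer of 16 neurons and 10 outputs, one per digit), the activation is the sigmoid, and the weights are random and untrained, so the ‘predicted’ digit is meaningless until the network has been trained with backpropagation.

```python
import math
import random


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def layer_forward(weights, biases, inputs):
    """Activations of one layer: sigmoid(W . inputs + b), one row of W per neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feedforward(layers, inputs):
    """Pass the input through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(weights, biases, activations)
    return activations


def random_layer(n_in, n_out, rng):
    """Hypothetical helper: random weights and biases in [-1, 1]."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases


rng = random.Random(0)
layers = [random_layer(64, 16, rng), random_layer(16, 10, rng)]
pixels = [rng.random() for _ in range(64)]  # stand-in for a digit image

outputs = feedforward(layers, pixels)
predicted_digit = max(range(10), key=lambda i: outputs[i])  # largest activation wins
print(len(outputs), predicted_digit)
```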

The book makes heavy use of visualisations, including diagrams that help readers step through the data, weights and biases in each layer of a neural network. Here is an example of one of the neural network visualisations from the book:

The book touches on the important steps of creating a neural network, including calculating activations layer by layer (the feedforward method) and calculating gradients with backpropagation to train the network. Python’s scikit-learn library provides an MLPClassifier class that automates many of these steps. The book explains how to use MLPClassifier, as in the following code excerpt:

When you get to the end of the machine learning sections, you may have more appreciation of what’s going on inside the functions you use on a daily basis. For example, many machine learning algorithms require maximising or minimising functions in multiple dimensions. Being able to calculate gradients in three and higher dimensions is therefore a prerequisite for understanding what goes on inside algorithms such as gradient boosting machines.
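As a small illustration of gradients beyond two dimensions, the sketch below estimates the gradient of a three-variable function numerically and then follows it downhill with plain gradient descent. The test function `bowl`, the learning rate and the stopping tolerance are arbitrary choices for the example; real machine learning libraries use far more sophisticated optimisers, but the core idea of repeatedly stepping against the gradient is the same.

```python
def approx_gradient(f, point, h=1e-6):
    """Central-difference estimate of the gradient of f at a point in n dimensions."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        forward[i] += h
        backward = list(point)
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def gradient_descent(f, start, learning_rate=0.1, tolerance=1e-8, max_steps=10_000):
    """Step against the gradient until it is (nearly) zero or max_steps is hit."""
    point = list(start)
    for _ in range(max_steps):
        grad = approx_gradient(f, point)
        if sum(g * g for g in grad) ** 0.5 < tolerance:
            break
        point = [p - learning_rate * g for p, g in zip(point, grad)]
    return point


def bowl(v):
    """A bowl-shaped function of three variables with its minimum at (1, -2, 3)."""
    x, y, z = v
    return (x - 1) ** 2 + (y + 2) ** 2 + (z - 3) ** 2


minimum = gradient_descent(bowl, [0.0, 0.0, 0.0])
print([round(c, 4) for c in minimum])  # [1.0, -2.0, 3.0]
```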

I wouldn’t be surprised if 100 different readers got 100 different things out of this book. It’s a pretty easy read if you want to skim it, and even with a skim I’d say you will pick up snippets of useful learning.

If you’re already hard-core in either machine learning theory or programming, this book probably isn’t for you. But if you have a passing interest in learning Python, or you’re looking for your first foray into machine learning, this book is definitely worth a shot.

CPD: Actuaries Institute Members can claim two CPD points for every hour of reading articles on Actuaries Digital.